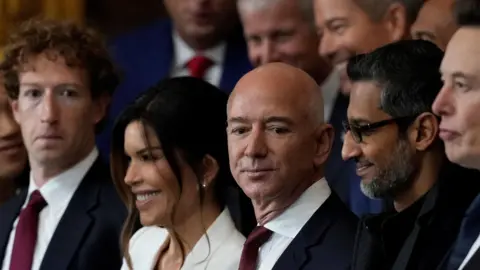

Elon Musk's AI model Grok will no longer be able to edit photos of real people to show them in revealing clothing in jurisdictions where it is illegal, after widespread concern over sexualised AI deepfakes.

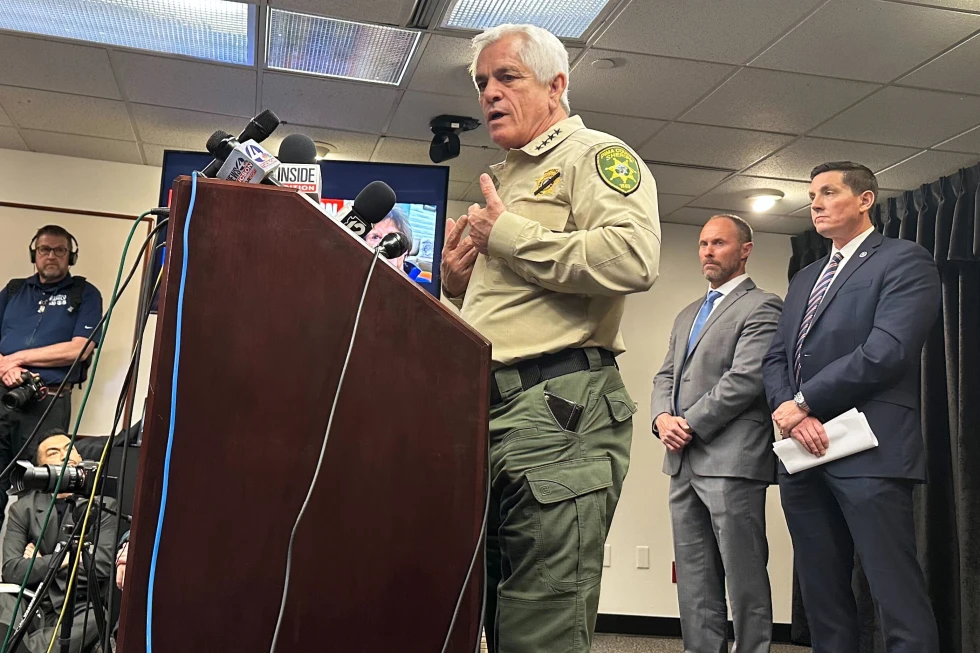

The announcement came after California's top prosecutor announced an investigation into how the model is being used, particularly in relation to images involving children.

X said: "We have implemented technological measures to prevent the Grok account from allowing the editing of images of real people in revealing clothing such as bikinis." The restriction applies to all users, including those who subscribe to premium services.

To further enforce these rules, X said it would geo-block users from generating explicit content featuring real people in regions where such content is prohibited.

As part of its updated policies, X also reiterated that only paying subscribers will be able to use Grok for photo editing, a measure intended to improve accountability and compliance with local laws.

Musk has noted that while Grok will allow 'upper body nudity of imaginary adult humans (not real ones)' under NSFW settings, the policies will vary according to local legislation.

Malaysia and Indonesia have already moved to ban Grok following complaints that it was used to create explicit images without consent. In the UK, regulators are investigating whether X has complied with laws governing sexual imagery.

The decision reflects growing scrutiny of AI technologies, amid mounting warnings that such tools can be misused for harassment and the unauthorised manipulation of people's images.