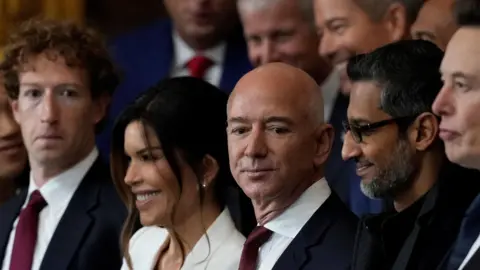

On February 20, 2026, India will implement new rules that require social media companies to remove unlawful content within three hours of being notified, a sharp reduction from the previous 36-hour window. The rules apply to major platforms such as Meta, YouTube, and X, and they also cover AI-generated content.

The Indian government has not given a clear rationale for shortening the timeframe. Critics worry that the change signals a broader strategy to tighten control over online discourse, and they fear it could lead to censorship in a nation with over a billion internet users.

In recent years, the Indian government has used existing laws to compel social media firms to remove content deemed illegal under statutes related to national security and public order. According to transparency documents, more than 28,000 URLs were blocked at the government's request in 2024.

The amendments also set rules for AI-generated content. For the first time, the law defines what counts as AI-generated material, and it requires platforms to label such content and to use automated tools to identify and remove illegal AI content.

Experts and digital rights advocates have questioned whether the new rules can be enforced in practice. Many argue that the three-hour deadline may push platforms toward automated removals instead of thorough human review, raising the risk of censorship.

Critics, including digital rights organizations, have described the new requirements as potentially the most stringent takedown regulations in any democracy. They warn that without effective human oversight, the result could be rampant censorship.

As these new rules come into effect, the implications for freedom of expression and digital rights in India remain a focal point of discussion.